Storage on Viking

Viking is a self-contained machine, so your normal UoY home directories are not available on it. This is intentional, for the following reasons:

If Viking depended on the University filestores, a failure of the dedicated network link between campus and Viking could slow jobs down or cause them to fail. Because Viking is self-contained, jobs continue to run until the link is re-established.

If users read from or wrote to the University filestores from Viking, they could bring down the entire storage system for the University.

UoY home directories are not designed for high-performance computing. Viking instead has its own filesystem designed for high-performance workloads.

Summary

It is vital that users continue to manage their data on the Viking cluster. There are currently 6 areas where data can be stored. The table below details the areas, the type of data you should store there, and what is/isn’t backed up.

| Area | Backed up | Location | Default quota* | Data type | Deletion policy |
|---|---|---|---|---|---|
| Home | No | /users/abc123 | | User code, programs, local applications | Never deleted |
| Scratch (users) | No | /mnt/scratch/users/abc123/ | 2TB | Active research data used for jobs running on Viking | Data not touched in 90 days is deleted |
| Scratch (projects) | No | /mnt/scratch/projects | 2TB | Active shared project data for jobs running on Viking. Other types of shared data for workloads that an entire project can use. Useful for joint application testing and development | Data not touched in 90 days is deleted |
| Scratch (flash) | No | lfs commands | 2TB | Flash (fast) storage that can be used if your workloads are IO intensive | Data not touched in 90 days is deleted |
| longship | No | Viking: | 2TB | Warm data/hot data | Not deleted |
| localtmp | No | /users/abc123/localtmp | | Tmp files for jobs | Clean-up period TBD |

* (can be increased on request)

Recommended workflow

The diagram below shows the recommended workflow, which keeps your data from being at risk of deletion on Scratch and makes the most of the available storage options and data management tools. Please refer to the Transferring data to Viking page for specifics on using the programs mentioned in the diagram. For further guidance on Research Data Management (RDM), please refer to the University’s overview and the Library’s practical guide.

![digraph {

node [shape=box];

local [label="Personal computer"];

storage1 [label="University Filestore"];

external [label="External collaborators / Public data"];

gdrive [label="Google Drive"];

longship1 [label="Longship"];

longship2 [label="Longship"];

compute [label="Viking compute nodes"];

scratch [label="Viking Scratch (users or projects)"];

storage2 [label="University Filestore"];

local -> longship1 [label="WinSCP/rsync/scp"];

storage1 -> longship1 [label="Globus"];

external -> longship1 [label="Globus"];

gdrive -> longship1 [label="rclone"];

longship1 -> compute [label="Read inputs"];

compute -> scratch [label="Write outputs"];

scratch:w -> longship2:w [label="rsync/scp"];

longship2:w -> storage2:w [label="Backup (Globus)"];

longship2:e -> scratch:e [label="Delete when no longer needed", style=dotted];

storage2:e -> longship2:e [label="Delete when no longer needed", style=dotted];

}](../_images/graphviz-e79782e46e8045219ff901f8a29d810ff3f9517d.png)

Locations

Home

When you log in to Viking you will land in your home directory, referred to as ~.

This directory has a size limit of 100GB and a file limit of 400,000. It should mainly be read from and not regularly written to.

In particular, it is a sensible place to store software such as Python packages and virtual environments, R packages, application caches and conda environments.

It is also where Git repositories that are only read from should be stored, since they contain a large number of small files.

It should not be written to during jobs; instead, program output should be written to one of the scratch options.

Your home directory is located at /users/abc123:

$ pwd

/users/abc123

Tip

Some older user accounts have a localscratch shortcut in their home directory. This points to the same place as ~/localtmp and can be safely deleted.

Scratch

In your home directory is a shortcut called scratch to your personal scratch space, which is where program output should be written to.

Scratch is a high performance file system that runs Lustre, an open-source, parallel file system that supports High Performance Computing (HPC) environments.

Scratch works best when the filesystem is not at capacity. To keep it efficient, we will be implementing a policy under which data is deleted automatically if it has not been touched in 90 days.

If you wish to keep your data please ensure it is backed up on the University filestore, moved to Longship, or backed up in the cloud. We will be making exceptions to this rule for certain project directories where groups share data between teams. This is to encourage users to not download the same datasets multiple times.

Scratch is mounted at /mnt/scratch/users/abc123.

$ cd ~/scratch

$ pwd

/mnt/scratch/users/abc123/
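To see which files might fall under the 90-day policy described above, a `find` search by timestamp is one option. The following is a minimal sketch, not an official tool: the function name `list_stale_files` is hypothetical, and it assumes that "touched" refers to access time (`-atime`). If the policy turns out to be based on modification time, `-mtime` would be the test to use instead.

```shell
# list_stale_files DIR DAYS: print regular files under DIR whose last access
# was more than DAYS days ago.
# Assumption: the deletion policy keys on access time; swap -atime for -mtime
# if it uses modification time instead.
list_stale_files() {
    # -H follows DIR itself if it is a symlink (as the ~/scratch shortcut is).
    find -H "$1" -type f -atime +"$2" -print
}

# Example (not run here): list_stale_files ~/scratch 90
```

Anything this lists that you still need is a candidate for moving to Longship or backing up elsewhere.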

Tip

If you need more Scratch storage space after deleting any unused data, please email us at itsupport@york.ac.uk.

Scratch (projects)

Shared project folders can be created for you on Viking as a place for your team to easily share files. To request a shared project folder please email itsupport@york.ac.uk and let us know the project code you wish the folder to be associated with and who should be the admin(s).

The shared project folder will:

Be named after your project code

Be located in Viking’s /mnt/scratch/projects/ folder

Have no quota limit associated with the folder itself; the contents count towards their respective owners’ quotas

As the folders themselves do not have quota limits, you will need to ensure your personal quota is large enough for the files in your own scratch folder as well as any of your files in the shared project folder. If you transfer ownership of a file to another user, it counts towards their quota from then on.

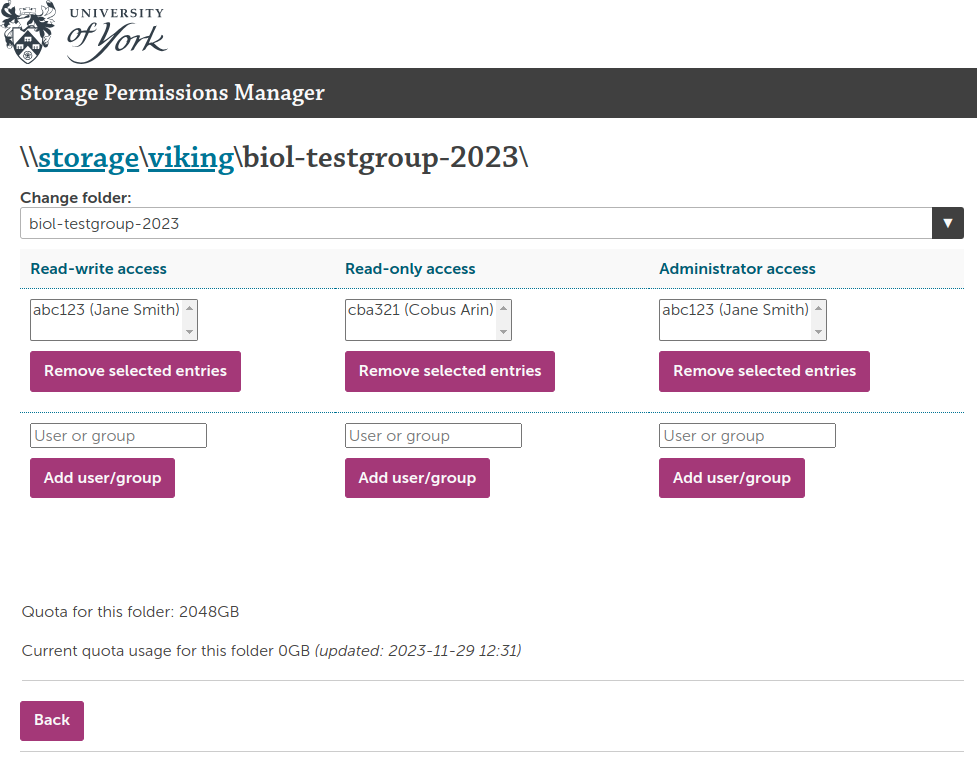

Manage user access

If you are an admin of the shared project folder, you can control each project member’s access to its contents through permman.york.ac.uk by adding users to, or removing them from, the Read-write access, Read-only access and Administrator access groups.

NB: To check if you are an admin, attempt to follow the steps outlined below.

If you are not an admin you will not be able to see the project folder in Perm Man.

To manage the groups, log into permman.york.ac.uk, select viking from the list and then click the Go button. You’ll see a list of all the project folders you can manage. Select the desired folder from the list and click Edit access to folder. You’ll be presented with a screen similar to the one below and from here you can add and remove users from the three groups:

The Administrator access group only allows access to this page on permman - not access to the folder on Viking.

Hint

Changes made on the permman page are reflected on Viking three times a day, so there will be some delay and changes may take up to 24hrs to take effect.

Hint

You can only add valid Viking user accounts on this page. Groups cannot be added, only user accounts. To check if someone has a Viking account, use the id command on Viking, for example id abc123, where abc123 is the username. To create an account on Viking please see creating an account.

Sub folders

The above will only allow you to add or remove access to the main shared project folder. You can manually create subfolders with the mkdir command on Viking.

If you wish to create subfolders with restricted access, you can set their permissions with the chmod or setfacl commands, if you are familiar with them.
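As an illustration of the restricted subfolder idea above, the sketch below creates a subfolder that only its owner can enter. The function name `make_restricted_subdir` and the folder name in the example are hypothetical, and the `setfacl` line assumes that ACLs are enabled on the filesystem, which this page does not confirm.

```shell
# make_restricted_subdir PARENT NAME: create PARENT/NAME and remove all
# access for everyone except the owner.
make_restricted_subdir() {
    mkdir -p "$1/$2" &&
    chmod 700 "$1/$2"    # rwx for the owner, nothing for group or others
}

# Example (not run here), with a placeholder project path:
#   make_restricted_subdir /mnt/scratch/projects/<project-code> private-data
# To then let one named user read the folder (assumes ACL support):
#   setfacl -m u:abc123:rx /mnt/scratch/projects/<project-code>/private-data
```

Starting from owner-only access and then granting individual users access keeps the default closed, which is usually the safer direction when a folder sits inside a shared project area.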

Scratch (Flash)

For IO-intensive workflows you may find better performance using our high-performance flash storage. This filesystem also runs Lustre. Its overall capacity is smaller, so we ask users to clean up and delete data after use. To use flash scratch, perform the following steps:

$ mkdir test-dir

$ lfs setstripe --pool flash test-dir

Then write to the directory that has the flash pool set. By default this stripes over just one Object Storage Target (OST). To stripe across, for example, 10:

$ lfs setstripe --pool flash --stripe-count 10 test-dir

Note

It’s easiest to create a new dir with the striping set, then cp the files there (don’t use mv, as this only updates the metadata and the underlying objects remain the same). For better Lustre performance it may be worth explicitly striping across multiple OSTs there as well. Striping in Lustre should only be used for large files; using it for small files can actually degrade performance. Further guidance on using Lustre to achieve maximum performance can be found here.

Attention

Please remember to delete your data after you’ve finished using the flash storage.
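The Note above recommends creating a new directory with the flash pool set and copying files into it, not moving them. The sketch below wraps those steps in one function. The name `stage_to_flash` and the paths in the example are hypothetical; the `lfs setstripe --pool flash` call is the one shown earlier, and the fallback branch is only there so the function still copies on machines without Lustre tools.

```shell
# stage_to_flash SRC DEST: create DEST with the flash pool set, then copy the
# contents of SRC into it so the new files are written to flash storage.
stage_to_flash() {
    mkdir -p "$2" || return 1
    if command -v lfs >/dev/null 2>&1; then
        # Same command as above; add --stripe-count N for large files.
        lfs setstripe --pool flash "$2" || return 1
    else
        echo "lfs not found: copying without setting the flash pool" >&2
    fi
    # Copy, do not move, so the data is rewritten with the new layout.
    cp -a "$1/." "$2/"
}

# Example (not run here): stage_to_flash ~/scratch/inputs ~/scratch/flash-inputs
# Afterwards, `lfs getstripe ~/scratch/flash-inputs` shows the layout in use.
```

Once the job has finished, removing the staged directory frees the flash capacity for other users.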

Longship

Where possible, you should use Longship to read data directly into your workloads, outputting files to Scratch. Longship is not a high-performance filestore, but a number of groups have found no drop in performance compared to Scratch. Unlike Scratch, Longship is accessible directly from campus, making data transfer and management more straightforward, and it has no deletion policy.

All Viking users have personal space on Longship at /mnt/longship/users/<userid>, and if you request a Project Folder on Scratch you are also given a project folder at /mnt/longship/projects/<project-code>.

Longship is mounted read/write on the Viking login nodes and read only on the compute nodes.

To access Longship from Campus you can mount it as a network drive following the University’s documentation with the paths provided in the table above.

Local temp

You also have access to a localtmp folder in your home directory, which points to storage on fast SSD drives on the node you are currently logged into. This area is not backed up, so it should only be used for data while it is being processed; afterwards the data should be backed up or archived if needed. You can access this directory at:

/users/abc123/localtmp
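One way to follow the advice above is to give each piece of work its own directory under localtmp, copy the results somewhere longer-lived, and then clean up. The following is a sketch under assumptions: the function name `run_in_localtmp`, the `job.XXXXXX` naming and the example paths are all hypothetical.

```shell
# run_in_localtmp BASE DEST CMD...: run CMD inside a private working directory
# created under BASE, copy everything it produced to DEST, then remove the
# working directory. Returns the exit status of CMD.
run_in_localtmp() {
    base=$1
    dest=$2
    shift 2
    workdir=$(mktemp -d "$base/job.XXXXXX") || return 1
    rc=0
    # Subshell, so the caller's working directory is left unchanged.
    ( cd "$workdir" && "$@" ) || rc=$?
    mkdir -p "$dest" && cp -a "$workdir/." "$dest/"
    rm -rf "$workdir"
    return "$rc"
}

# Example (not run here), with a hypothetical program name:
#   run_in_localtmp ~/localtmp ~/scratch/results ./my_analysis
```

Because localtmp lives on the node you are on, the copy to DEST has to happen on that same node, which is why the function does it before returning.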

Checking your quotas

To check how much space you have left, run the following command:

$ myquota

This produces output similar to the following, showing your scratch quota, your home folder quota (/users/abc123) and your Longship quota:

User quotas:

scratch:

Filesystem used quota limit grace files quota limit grace

/mnt/scratch 1.23T 2T 2.1T - 123445 0 0 -

home:

Filesystem space quota limit grace files quota limit grace

10.10.0.15:/export/users

41274M 100G 110G 195k 400k 500k

longship:

Filesystem space quota limit grace files quota limit grace

longship.york.ac.uk:/users

0K 0K 2048G 1 0 0

To also show project quotas, use: /opt/site/york/bin/myquota --all

As the output suggests, running this command with the --all flag will also show project quotas.

$ myquota --all

User quotas:

scratch:

Filesystem used quota limit grace files quota limit grace

/mnt/scratch 1.23T 2T 2.1T - 123445 0 0 -

home:

Filesystem space quota limit grace files quota limit grace

10.10.0.15:/export/users

41274M 100G 110G 195k 400k 500k

longship:

Filesystem space quota limit grace files quota limit grace

longship.york.ac.uk:/users

0K 0K 2048G 1 0 0

Longship project quota(s):

arch-digging-2018:

Filesystem Size Used Avail Use% Mounted on

longship.york.ac.uk:/projects 2.0T 29M 2.0T 1% /mnt/autofs/longship-projects

biol-cells-2019:

Filesystem Size Used Avail Use% Mounted on

longship.york.ac.uk:/projects 2.0T 1.6T 417G 80% /mnt/autofs/longship-projects

chem-air-2024:

Filesystem Size Used Avail Use% Mounted on

longship.york.ac.uk:/projects 2.0T 0 2.0T 0% /mnt/autofs/longship-projects

phys-magnets-2024:

Filesystem Size Used Avail Use% Mounted on

longship.york.ac.uk:/projects 2.0T 1.3T 737G 65% /mnt/autofs/longship-projects

When you log in to Viking you will be told if you are over quota. If you have exceeded the 100GB or 400,000-file limit in your home directory, you will need to delete files or move them to your scratch area. There is a grace period of 7 days, after which you will lose access to Viking.

If you run out of scratch space on Viking, please first delete any unneeded data from your area. If you have data still in active use, please move it to Longship for medium-term storage. If you still need more space, email us at itsupport@york.ac.uk.
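When deciding what to delete or move, it can help to see which directories take up the most space. The sketch below uses `du` and `sort` for this; the function name `largest_dirs` is hypothetical, and it assumes the directory you point it at has at least one subdirectory.

```shell
# largest_dirs DIR [N]: print the N largest immediate subdirectories of DIR
# (default 10), biggest first, with sizes in kilobytes.
largest_dirs() {
    du -sk -- "$1"/*/ | sort -rn | head -n "${2:-10}"
}

# Example (not run here): largest_dirs ~/scratch 5
```

On a large scratch area this can take a while, since `du` has to visit every file.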

Tip

If you receive a warning that you have exceeded the 400,000 file limit in your home directory and would like a summary of where all the files are located, try this command:

cd ~ && ls -1d */ .*/ | grep -vE 'scratch|\./|\.\./' | xargs du --inodes -s

This should provide a breakdown of the number of files (technically inodes) by top-level directory. If you don’t mind waiting for all the results to be sorted, try the following command:

cd ~ && ls -1d */ .*/ | grep -vE 'scratch|\./|\.\./' | xargs du --inodes -s | sort -n

Transferring data to and from Viking

Please see the DATA MANAGEMENT section of the left navigation bar for links to the various locations and methods for transferring data to and from Viking.

Backing up data

There are two main options, depending on how frequently you need to access the data: the University Filestore or the Vault.

The Vault provides unlimited cold storage and is appropriate for storing sensitive data, but retrieving the data can take some time and may incur a charge.

The Filestore is more readily accessible. If you need more space, please contact itsupport@york.ac.uk. If you use the Filestore for backing up, the data will usually be transferred to the Vault for archiving later.

Other options are available. Please browse the DATA MANAGEMENT section in the left navigation bar to see some common methods, for example the Filestore and Vault or Google Drive options.

Warning

Please ensure you regularly back up your data. If there is a catastrophic failure, all data on Viking could be lost!